Getting Started

If you already have an image containing a QR code that you’d like to decode, the process is pretty straightforward.

Installation

deqr is published on PyPI with pre-built binaries for common platforms and modern Python versions. It can be installed with pip:

```shell
pip install deqr
```

A Basic Example

Decoding From an Image

The easiest way to decode QR codes from an image file is to load the image with one of the external OpenCV or Pillow libraries. These libraries are not shipped as dependencies of deqr, so you have to install whichever one you want to use yourself (for example, with pip install opencv-python or pip install Pillow).
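Because both libraries are optional, a script may want to check which one is available before choosing how to load images. The sketch below uses only the standard library's importlib.util.find_spec, which locates a module without importing it. The helper name available_image_libraries is hypothetical, and the sketch assumes the usual import names (cv2 for OpenCV, PIL for Pillow).

```python
import importlib.util

# Import names of the optional image libraries: OpenCV is imported as "cv2"
# and Pillow as "PIL". deqr itself depends on neither.
OPTIONAL_IMAGE_LIBRARIES = {"OpenCV": "cv2", "Pillow": "PIL"}


def available_image_libraries():
    """Return the names of the optional image libraries that can be imported.

    Hypothetical helper: find_spec returns None for a top-level module that
    is not installed, without actually importing it.
    """
    return [
        name
        for name, module in OPTIONAL_IMAGE_LIBRARIES.items()
        if importlib.util.find_spec(module) is not None
    ]


if __name__ == "__main__":
    found = available_image_libraries()
    if found:
        print("Image libraries available:", ", ".join(found))
    else:
        print("Install opencv-python or Pillow to load image files.")
```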

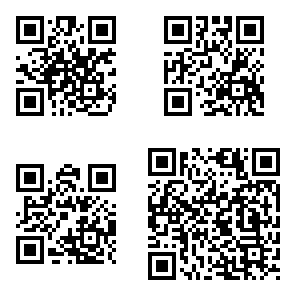

Given the following image, which is a directly generated image and therefore ideal for decoding:

This image contains codes with data in the four basic types: numeric, alphanumeric, byte, and kanji.

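With OpenCV and the QRdec decoder:

```python
import cv2
import numpy  # used by the visualization examples below

import deqr

image_data = cv2.imread("amalgam.png")
decoder = deqr.QRdecDecoder()
decoded_codes = decoder.decode(image_data)
```

With OpenCV and the Quirc decoder:

```python
import cv2
import numpy  # used by the visualization examples below

import deqr

image_data = cv2.imread("amalgam.png")
decoder = deqr.QuircDecoder()
decoded_codes = decoder.decode(image_data)
```

With Pillow and the QRdec decoder:

```python
import PIL.Image
import PIL.ImageDraw  # used by the visualization examples below
import PIL.ImageFont  # used by the visualization examples below

import deqr

image_data = PIL.Image.open("amalgam.png")
decoder = deqr.QRdecDecoder()
decoded_codes = decoder.decode(image_data)
```

With Pillow and the Quirc decoder:

```python
import PIL.Image
import PIL.ImageDraw  # used by the visualization examples below
import PIL.ImageFont  # used by the visualization examples below

import deqr

image_data = PIL.Image.open("amalgam.png")
decoder = deqr.QuircDecoder()
decoded_codes = decoder.decode(image_data)
```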

Note

deqr aims to provide sane defaults for data conversion while giving the user enough flexibility to deal with nonstandard QR codes or codes created with domain-specific data encodings. See the deqr.QRdecDecoder.decode() documentation for more information about how data conversion is performed.
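When a code carries byte-mode data in an encoding you know in advance (for example, a domain-specific system that writes Shift JIS), you may prefer to decode the raw bytes yourself. How to obtain raw bytes from deqr is governed by the decode() options mentioned in the note and is not assumed here; the sketch below is only a hypothetical post-processing helper, decode_payload, that tries a list of candidate encodings in order.

```python
def decode_payload(raw, encodings=("utf-8", "shift_jis", "latin-1")):
    """Decode raw byte-mode data by trying each candidate encoding in order.

    Hypothetical post-processing helper, not part of deqr. Returns a
    (text, encoding) pair for the first encoding that succeeds.
    """
    for encoding in encodings:
        try:
            return raw.decode(encoding), encoding
        except UnicodeDecodeError:
            continue  # not valid in this encoding; try the next candidate
    raise ValueError("none of the candidate encodings could decode the data")


# Plain ASCII is valid UTF-8, so the first candidate succeeds.
print(decode_payload(b"https://github.com/torque/deqr"))
# Shift JIS bytes for Japanese text are not valid UTF-8, so shift_jis is used.
text, encoding = decode_payload("こんにちは".encode("shift_jis"))
print(encoding)  # shift_jis
```

The order of the candidates matters: latin-1 maps every byte to some character and therefore never fails, so it only makes sense as the last entry.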

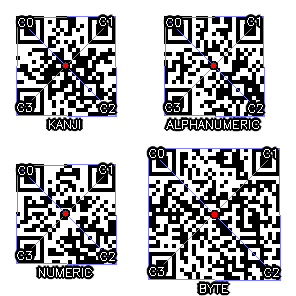

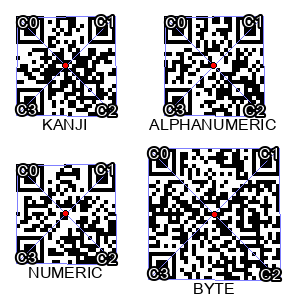

All four examples produce virtually identical output (the bounding box computation varies slightly between the two decoders):

```python
decoded_codes = [
    QRCode(
        version=2,
        ecc_level=QREccLevel.H,
        mask=4,
        data_entries=(
            QrCodeData(type=QRDataType.KANJI, data="こんにちは世界"),
        ),
        corners=((17, 17), (115, 16), (116, 116), (16, 115)),
        center=(65, 65),
    ),
    QRCode(
        version=2,
        ecc_level=QREccLevel.H,
        mask=5,
        data_entries=(
            QrCodeData(type=QRDataType.ALPHANUMERIC, data="HELLO WORLD"),
        ),
        corners=((181, 17), (279, 16), (280, 116), (180, 115)),
        center=(229, 65),
    ),
    QRCode(
        version=4,
        ecc_level=QREccLevel.H,
        mask=1,
        data_entries=(
            QrCodeData(
                type=QRDataType.BYTE, data="https://github.com/torque/deqr"
            ),
        ),
        corners=((148, 148), (279, 148), (280, 280), (148, 279)),
        center=(214, 214),
    ),
    QRCode(
        version=2,
        ecc_level=QREccLevel.H,
        mask=3,
        data_entries=(
            QrCodeData(
                type=QRDataType.NUMERIC, data=925315282350536773542486064879
            ),
        ),
        corners=((17, 181), (115, 180), (116, 280), (16, 279)),
        center=(65, 229),
    ),
]
```
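The sample output contains one code per basic data type. As a rough illustration of which payloads fit which type, the sketch below picks the most compact of the four modes that can hold a whole string, using the character sets from the QR code standard (ISO/IEC 18004): digits only for numeric; digits, uppercase letters, space and $ % * + - . / : for alphanumeric; double-byte Shift JIS characters in the ranges 0x8140 to 0x9FFC and 0xE040 to 0xEBBF for kanji; anything else as bytes. The function name qr_mode_for is hypothetical, and real encoders may also mix several modes within one code.

```python
import string

# Alphanumeric-mode character set from the QR code standard (45 characters).
ALPHANUMERIC_CHARS = set(string.digits + string.ascii_uppercase + " $%*+-./:")


def _is_kanji_char(char):
    """True if char encodes to one double-byte Shift JIS value in a kanji-mode range."""
    try:
        encoded = char.encode("shift_jis")
    except UnicodeEncodeError:
        return False
    if len(encoded) != 2:
        return False
    value = int.from_bytes(encoded, "big")
    return 0x8140 <= value <= 0x9FFC or 0xE040 <= value <= 0xEBBF


def qr_mode_for(text):
    """Return the most compact single QR data mode able to hold text (hypothetical helper)."""
    if text and all(c in string.digits for c in text):
        return "NUMERIC"
    if text and all(c in ALPHANUMERIC_CHARS for c in text):
        return "ALPHANUMERIC"
    if text and all(_is_kanji_char(c) for c in text):
        return "KANJI"
    return "BYTE"  # byte mode can carry anything


for payload in ("925315282350536773542486064879", "HELLO WORLD", "https://github.com/torque/deqr"):
    print(qr_mode_for(payload))  # NUMERIC, then ALPHANUMERIC, then BYTE
```

The lowercase letters in the URL are what push it out of alphanumeric mode and into byte mode.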

Visualizing the Results

When trying to understand what the decoder is finding, it can be helpful to annotate the source image with the information the decoder returns. The following examples draw each code's bounding box, diagonals, center point, corner indices, and data type onto the image decoded above.

Note

If your source image is grayscale, you will not be able to draw colored annotations on it without first converting it into a color space with color channels. See the documentation of the annotation tool you are using (e.g. PIL.Image.Image.convert()) for instructions on how to do that.
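For a NumPy image, one way to do the conversion is to copy the single gray channel into three identical channels, which is the result cv2.cvtColor(image, cv2.COLOR_GRAY2BGR) produces; with Pillow, image.convert("RGB") does the same job. Note that cv2.imread loads images as 3-channel BGR by default, so with OpenCV this mostly matters if you loaded the image with cv2.IMREAD_GRAYSCALE. The sketch below shows the NumPy version so the array shapes are visible; the helper name to_three_channels is hypothetical.

```python
import numpy


def to_three_channels(gray):
    """Replicate a (height, width) grayscale array into (height, width, 3).

    Every channel receives the same intensity, so the image looks unchanged
    but can now hold colored annotations. Hypothetical helper.
    """
    if gray.ndim == 3 and gray.shape[2] == 1:
        gray = gray[:, :, 0]  # drop a trailing single-channel axis
    if gray.ndim != 2:
        raise ValueError("expected a single-channel image")
    # repeat() returns a new contiguous array, which OpenCV drawing calls accept.
    return numpy.repeat(gray[:, :, numpy.newaxis], 3, axis=2)


gray = numpy.array([[0, 128], [255, 64]], dtype=numpy.uint8)
color = to_three_channels(gray)
print(color.shape)  # (2, 2, 3)
```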

With OpenCV:

```python
def draw_text(image, text, location, alignment):
    # Draw white text with a black outline so it stays legible on any background.
    # `alignment` uses numeric-keypad positions relative to `location`:
    # 7 puts the text's top-left corner there, 8 its top center, 3 its
    # bottom-right corner, and so on.
    font_face = cv2.FONT_HERSHEY_SIMPLEX
    font_scale = 0.4
    font_thickness = 1
    line_type = cv2.LINE_AA

    (width, height), baseline = cv2.getTextSize(
        text, fontScale=font_scale, fontFace=font_face, thickness=font_thickness
    )

    # cv2.putText positions text by the left end of its baseline, so shift the
    # origin by the keypad column (x) and row (y).
    alignment -= 1
    location = (
        location[0] + [0, -width // 2, -width][alignment % 3],
        location[1] + [-baseline, height // 2, height][alignment // 3],
    )

    # A thick black pass for the outline, then a thin white pass on top.
    cv2.putText(
        image,
        text,
        org=location,
        fontFace=font_face,
        fontScale=font_scale,
        color=(0, 0, 0),
        thickness=font_thickness + 3,
        lineType=line_type,
    )
    cv2.putText(
        image,
        text,
        org=location,
        fontFace=font_face,
        fontScale=font_scale,
        color=(255, 255, 255),
        thickness=font_thickness,
        lineType=line_type,
    )


for code in decoded_codes:
    box_color = (255, 127, 127)  # BGR: light blue

    # Bounding quadrilateral plus both diagonals (C0-C2 and C1-C3).
    cv2.polylines(
        image_data,
        [numpy.array(code.corners, dtype=numpy.int32)],
        True,  # isClosed
        color=box_color,
        thickness=1,
    )
    cv2.line(image_data, code.corners[0], code.corners[2], color=box_color, thickness=1)
    cv2.line(image_data, code.corners[1], code.corners[3], color=box_color, thickness=1)

    # Center point: a filled red dot with a black ring.
    cv2.circle(image_data, code.center, radius=3, color=(0, 0, 0), thickness=2)
    cv2.circle(
        image_data, code.center, radius=3, color=(0, 0, 255), thickness=cv2.FILLED
    )

    # Label each corner C0..C3; for an upright code the labels extend into the box.
    for idx, (corner, alignment) in enumerate(zip(code.corners, (7, 9, 3, 1))):
        draw_text(image_data, f"C{idx}", corner, alignment)

    # Label the data type of the first data entry just below corner C2.
    entry = code.data_entries[0]
    draw_text(image_data, entry.type.name, (code.center[0], code.corners[2][1] + 3), 8)

cv2.imwrite("amalgam-annotated-opencv-qrdec.png", image_data)
```

The OpenCV script for the Quirc decoder is identical except that it writes its output to amalgam-annotated-opencv-quirc.png.

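With Pillow:

```python
drawer = PIL.ImageDraw.Draw(image_data)


def translate(point, x, y=None):
    # Offset a point by (x, y); if y is omitted, offset both axes by x.
    if y is None:
        y = x
    return (point[0] + x, point[1] + y)


# This font file may not exist on every system; substitute the path of any
# TrueType font you have installed.
font = PIL.ImageFont.truetype("Arial Unicode.ttf", 16)

for code in decoded_codes:
    box_color = (127, 127, 255)  # RGB: light blue

    # Bounding quadrilateral plus both diagonals (C0-C2 and C1-C3).
    drawer.polygon(code.corners, outline=box_color)
    drawer.line((code.corners[0], code.corners[2]), fill=box_color, width=1)
    drawer.line((code.corners[1], code.corners[3]), fill=box_color, width=1)

    # Center point: a red dot with a black outline.
    drawer.ellipse(
        (translate(code.center, -3), translate(code.center, 3)),
        fill=(255, 0, 0),
        outline=(0, 0, 0),
        width=1,
    )

    # Label each corner C0..C3; for an upright code the anchors keep the
    # labels inside the box.
    for idx, (corner, anchor) in enumerate(zip(code.corners, ("lt", "rt", "rb", "lb"))):
        drawer.text(
            corner,
            f"C{idx}",
            font=font,
            anchor=anchor,
            fill=(255, 255, 255),
            stroke_fill=(0, 0, 0),
            stroke_width=2,
        )

    # Label the data type of the first data entry just below corner C2.
    entry = code.data_entries[0]
    drawer.text(
        (code.center[0], code.corners[2][1] + 3),
        entry.type.name,
        font=font,
        fill=(0, 0, 0),
        anchor="mt",
    )

image_data.save("amalgam-annotated-pillow-qrdec.png")
```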

The Pillow script for the Quirc decoder is identical except that it saves its output to amalgam-annotated-pillow-quirc.png.
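The annotations draw the two diagonals, C0 to C2 and C1 to C3, through each reported center. If you only have the four corners, one way to estimate a center is to intersect those diagonals. This is a plain geometric sketch, not necessarily how either decoder computes its center value; for the sample output above it lands within half a pixel of each reported center on both axes. The helper name diagonal_center is hypothetical.

```python
def diagonal_center(corners):
    """Intersect the diagonals C0-C2 and C1-C3 of a quadrilateral.

    corners holds four (x, y) points in C0..C3 order. Returns the intersection
    as floats, or raises ValueError if the diagonals are parallel.
    """
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = corners
    # Direction vectors of the two diagonals.
    d1x, d1y = x2 - x0, y2 - y0
    d2x, d2y = x3 - x1, y3 - y1
    # 2D cross product of the directions; zero means the diagonals are parallel.
    denom = d1x * d2y - d1y * d2x
    if denom == 0:
        raise ValueError("diagonals are parallel")
    # The intersection is at C0 + t * (C2 - C0) with t = num / denom.
    num = (x1 - x0) * d2y - (y1 - y0) * d2x
    # Multiply before dividing so integer inputs stay exact as long as possible.
    return (x0 + num * d1x / denom, y0 + num * d1y / denom)


# Corners of the KANJI code from the sample output (reported center=(65, 65)).
print(diagonal_center(((17, 17), (115, 16), (116, 116), (16, 115))))  # (65.5, 65.5)
```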

Practical Usage

QR codes were invented primarily to facilitate transferring information from real, physical objects into a computer system. As a result, images containing QR codes frequently suffer from noise, blur, lens distortion, uneven lighting, perspective offset, rotation, and other non-ideal characteristics that can impede reliable decoding.

deqr ships with defaults that should perform reasonably well in real-world scenarios.

Binarization

Binarization is the process of converting every pixel in an image to either completely white or completely black. Because the value of a QR code “bit” depends on whether its module is light or dark, this step makes a dramatic difference to decoding rates under variable lighting conditions. The built-in binarization performs an adaptive threshold, which decides whether each pixel is light or dark by comparing it to a group of its neighbors rather than to a single global cutoff. This approach compensates for images with poor or somewhat uneven lighting.
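To make the idea concrete, here is a minimal mean-based adaptive threshold in pure Python. It is a generic textbook version, not the algorithm deqr actually uses, and the radius and offset parameters are illustrative assumptions. Each pixel is compared to the mean of the square window around it (clipped at the image edges), computed in constant time per pixel with a summed-area table. Dark pixels become 0 and light pixels 255.

```python
def adaptive_threshold(pixels, radius=7, offset=5):
    """Binarize a grayscale image given as a list of rows of 0-255 ints.

    A pixel becomes 0 (dark) when it is more than `offset` below the mean of
    the (2*radius+1)-square window centered on it, and 255 (light) otherwise.
    """
    height, width = len(pixels), len(pixels[0])

    # Summed-area table with an extra zero row and column: integral[y][x] is the
    # sum of all pixels in rows < y and columns < x.
    integral = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += pixels[y][x]
            integral[y + 1][x + 1] = integral[y][x + 1] + row_sum

    result = []
    for y in range(height):
        top, bottom = max(0, y - radius), min(height, y + radius + 1)
        out_row = []
        for x in range(width):
            left, right = max(0, x - radius), min(width, x + radius + 1)
            # Sum of the window from four table lookups.
            window_sum = (
                integral[bottom][right] - integral[top][right]
                - integral[bottom][left] + integral[top][left]
            )
            mean = window_sum / ((bottom - top) * (right - left))
            out_row.append(0 if pixels[y][x] < mean - offset else 255)
        result.append(out_row)
    return result


# A 1x8 strip whose background brightens from left to right, with two "ink"
# pixels (10 and 120) that are darker than their immediate surroundings.
strip = [[40, 10, 80, 110, 140, 170, 120, 240]]
print(adaptive_threshold(strip, radius=1, offset=5))
# [[255, 0, 255, 255, 255, 255, 0, 255]]
```

With a single global cutoff of 128, the background pixels 40, 80 and 110 would be classified as dark along with the ink, which is exactly the failure mode under uneven lighting that a local comparison avoids.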

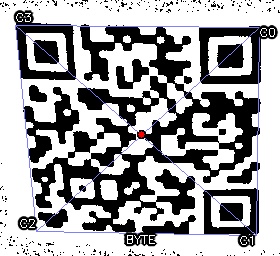

Demonstration

Below are two examples of successfully decoded images. The first shows binarization coping with poor lighting, and the second shows a somewhat blurry image with minor lens distortion. The outputs were generated with the same scripts as the basic example above, slightly modified to preserve the binarized image for demonstration purposes. In both images the QR code is also rotated relative to its nominal orientation, which illustrates that the bounding box corners are ordered according to the QR code's nominal orientation rather than the image's orientation.

The QRdec output also shows the inverted reflectance that QRdec requires.

[Comparison table, images not reproduced: columns Source, QRdec, and Quirc, with one row for each of the two example images.]
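Because corner ordering follows the code's nominal orientation, the direction from C0 to C1 (the code's nominal top edge) tells you how the code is rotated within the image. The hypothetical helper below measures that angle with math.atan2. Image y coordinates grow downward, so a positive angle means the code is rotated clockwise as displayed.

```python
import math


def rotation_degrees(corners):
    """Angle of the C0->C1 edge relative to the image's x axis, in degrees.

    0 means the code is upright; 90 means its nominal top edge points down the
    image (rotated 90 degrees clockwise as displayed). Hypothetical helper.
    """
    (x0, y0), (x1, y1) = corners[0], corners[1]
    return math.degrees(math.atan2(y1 - y0, x1 - x0))


# An upright code from the sample output: nearly 0 degrees.
print(round(rotation_degrees(((17, 17), (115, 16), (116, 116), (16, 115))), 1))  # -0.6
# A square turned 90 degrees clockwise: C0 now sits at the top right.
print(rotation_degrees(((100, 0), (100, 100), (0, 100), (0, 0))))  # 90.0
```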

Accessing Intermediates

While not particularly recommended, here's an example of accessing the intermediate binarized image by (ab)using the fact that binarization is an in-place mutation and that deqr.image.ImageLoader is passed through the decode process without being copied.

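```python
import cv2
import numpy

import deqr

image_data = cv2.imread("dark.jpg")

# Build the ImageLoader explicitly so a reference to it survives decoding.
img = deqr.image.ImageLoader(image_data)

# Decoding binarizes the loader's pixel data in place.
deqr.QRdecDecoder().decode(img)

# img.data holds the pixels as a flat buffer; reshape it into rows and
# columns before writing it out as an image.
reshaped = numpy.reshape(img.data, (img.height, img.width))
cv2.imwrite("dark-binarized.jpg", reshaped)
```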
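The reshape relies on the buffer being laid out row by row, with img.width pixels per row. Under that assumption (implied by the (height, width) reshape rather than documented separately here), the pixel at column x and row y lives at offset y * width + x. A dependency-free sketch of the same conversion, using the hypothetical helpers rows_from_buffer and pixel_at:

```python
def rows_from_buffer(buffer, width, height):
    """Split a flat row-major pixel buffer into a list of rows (lists of ints)."""
    if len(buffer) != width * height:
        raise ValueError("buffer size does not match width * height")
    return [list(buffer[y * width:(y + 1) * width]) for y in range(height)]


def pixel_at(buffer, width, x, y):
    """Read the pixel at column x, row y from a flat row-major buffer."""
    return buffer[y * width + x]


flat = bytes([0, 255, 255, 0, 0, 255])  # a 3-wide, 2-tall example image
print(rows_from_buffer(flat, 3, 2))  # [[0, 255, 255], [0, 0, 255]]
print(pixel_at(flat, 3, 2, 1))  # 255
```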